Product quality can be difficult to describe, yet we are perfectly aware of it. Once we have experienced a product, we can judge its quality almost instantly. It’s something we know and feel subjectively.

Ask each of your company’s departments to define product quality, and you’ll end up with entirely different answers. The marketing, operations, and economics departments will each have a very different idea of what product quality is. In other words, quality is largely a matter of perspective.

Think back to the Pepsi Challenge campaign of the 80s. Pepsi was the challenger brand at a time when Coke was undoubtedly more popular. Yet when asked for their preference in blind taste tests, participants largely chose Pepsi. This case both shows the power of marketing and illustrates that product quality has a lot to do with the customer’s perception.

But is the situation different in software development? Keep reading to find out.

What is software quality?

The subjective view of product quality doesn’t work for quality assurance (QA) engineers. QA professionals need specific criteria to verify that quality standards are met.

Developing quality software means building it so that it operates as intended. To make sure it does, software quality engineers check the application against functional and non-functional requirements.

| Functional requirements | Non-functional requirements |
| --- | --- |
| Describe “what” the app does | Describe “how” the app does it |
| Apply to a specific part of the application | Apply to the whole application |
| Primary requirements | Secondary requirements |
| Based on user requirements | Based on user expectations |
| Features and functions such as authentication, access levels, reporting, and transactions are tested | Reliability, usability, scalability, and performance are tested |

We covered that in greater detail in our article on functional vs non-functional testing, but here is a quick recap.

Functional requirements

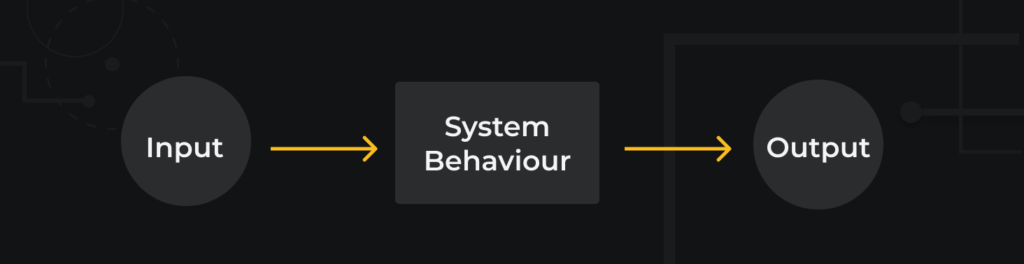

In software development, a function has a lot to do with user input and the system’s response (its behavior and output).

Functional requirements tend to be more project-specific and relate to a certain part of the system rather than to the system as a whole. In general, they describe specific features and functions, such as authentication, access levels, reporting, and transactions.

In an Agile environment, it’s common to specify functional requirements as use cases and user stories.

An example of a functional requirement would be “a user must be directed to a thank you page after confirming the order” or “an SMS-code must be sent to a specified phone number to complete a registration”.
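Because a functional requirement describes an observable behavior, it can usually be turned into an automated check. The sketch below illustrates the first example in Python. The `confirm_order` handler, the `Response` type, and the `/thank-you` path are hypothetical stand-ins, not part of any real application.

```python
from dataclasses import dataclass


@dataclass
class Response:
    """Simplified stand-in for an HTTP response (hypothetical)."""
    status: int
    location: str


def confirm_order(order_id: int) -> Response:
    """Hypothetical order handler that redirects to the thank-you page."""
    # A real handler would persist the order before redirecting.
    return Response(status=302, location=f"/thank-you?order={order_id}")


def test_user_is_redirected_after_confirmation() -> None:
    """Functional check: confirming an order leads to the thank-you page."""
    response = confirm_order(order_id=42)
    assert response.status == 302
    assert response.location.startswith("/thank-you")


test_user_is_redirected_after_confirmation()
```

In a real project, the same assertion would run against the actual application through a test framework rather than a stub.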

Non-functional requirements

Non-functional requirements concern the entire product. They are more general and are often viewed as a set of “best practices” rather than strict rules to be followed.

Functional requirements make sure the application serves its purpose, while non-functional requirements help it go beyond the basics and exceed the client’s expectations. In economic terms, these are the product attributes that create added value.

Non-functional requirements are not to be underestimated: they help you gain the upper hand over your competitors in the market.

A non-functional requirement would typically sound like “our website must comply with the GDPR” or “welcome emails must be sent within 5 minutes after registration is completed”.
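A non-functional requirement with a measurable threshold, such as the five-minute email rule, can also be checked automatically. The following is a minimal sketch. The function name and the sample timestamps are assumptions made for illustration.

```python
from datetime import datetime, timedelta

# Threshold taken from the example requirement above.
MAX_DELAY = timedelta(minutes=5)


def welcome_email_on_time(registered_at: datetime, email_sent_at: datetime) -> bool:
    """Return True if the email went out within MAX_DELAY of registration."""
    delay = email_sent_at - registered_at
    return timedelta(0) <= delay <= MAX_DELAY


# Illustrative timestamps, not real data.
registered = datetime(2024, 1, 1, 12, 0, 0)
print(welcome_email_on_time(registered, registered + timedelta(minutes=3)))  # True
print(welcome_email_on_time(registered, registered + timedelta(minutes=7)))  # False
```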

How Software Quality Is Measured: 5 Essential Attributes

Interestingly enough, quality is not a yes-or-no question: it is a matter of degree. In the previous section, we discussed how to verify that software quality standards are in place. In this section, we’ll focus on measuring the degree to which those standards are met.

ISO/IEC 25010 is a quality standard titled “Systems and software engineering – Systems and software Quality Requirements and Evaluation (SQuaRE) – System and software quality models”. It defines a model of software quality characteristics, such as functional suitability, reliability, performance efficiency, usability, security, compatibility, maintainability, and portability.

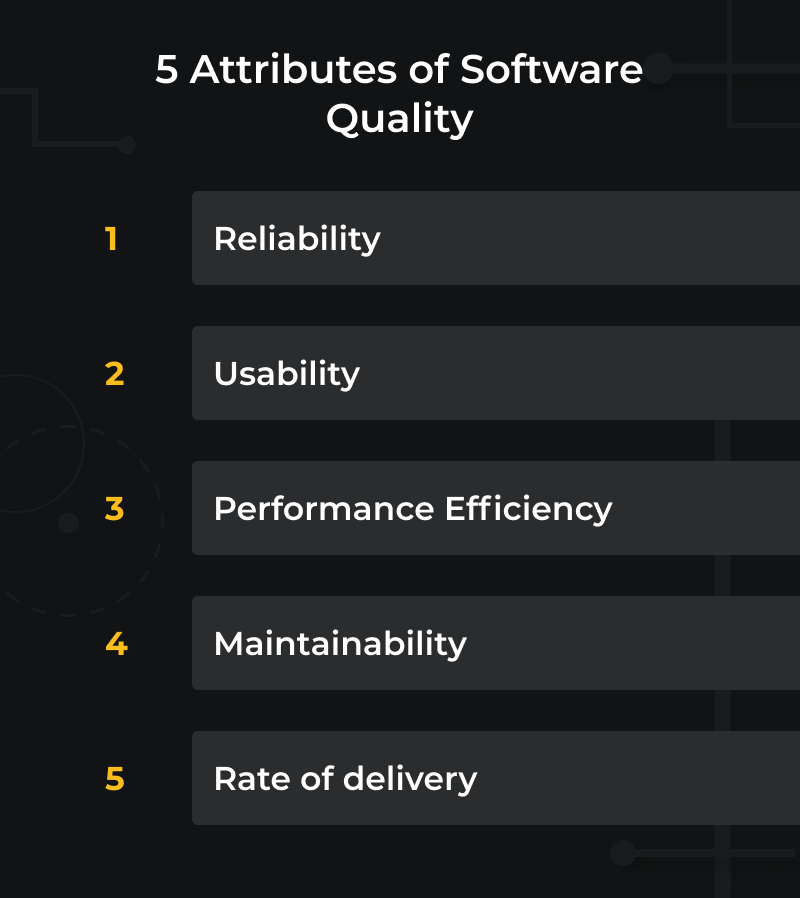

The Consortium for IT Software Quality (CISQ), a group of industry executives, criticized ISO 25010. They argued that its attributes were somewhat superficial and did not address code-level issues, dealing instead with the consequences of poorly written code. So they made their own set, essentially taking ISO 25010 and leaving out some of the non-essential attributes. CISQ standards include only reliability, efficiency, usability, maintainability, and rate of delivery.

Let’s take a closer look at the CISQ standards. We have added a few more details that are worth your attention.

Reliability

How does your app perform under specific conditions? If you don’t have a definite answer to this question, you probably aren’t fully aware of how reliable your app is. Reliability defines the likelihood of an app’s failure in cases like a product update or server migration. It can be measured through several tests and metrics:

- Load testing. QA specialists run a series of tests to determine the maximum number of concurrent users the app can handle. The breaking point where the app loses stability defines the limit of its operational capacity.

- Regression testing. Every time an app undergoes significant updates or modifications, regression testing is performed to ensure none of the original functionality is compromised. How often regressions occur should give you an idea of the app’s overall resilience.

Read more: What is Regression Testing? Definition, Importance, and Process

- Average failure rate. This one gives you a high-level view of how frequently failures occur on average after the production deployment.

- High-priority bug ratio. This metric shows the share of high-priority bugs in the total number of bugs identified by your QA team.

We covered this and other important metrics in our article on software testing metrics, which explains the crucial metrics a CTO should monitor closely.
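The last two metrics in the list are simple ratios. Here is a minimal sketch of how they might be computed. The function names, the per-day time unit, and the sample numbers are assumptions, since teams define these metrics over different periods.

```python
def high_priority_bug_ratio(high_priority: int, total: int) -> float:
    """Share of high-priority bugs among all bugs found by QA."""
    if total == 0:
        return 0.0  # no bugs reported, so no high-priority share
    return high_priority / total


def average_failure_rate(failures: int, days_in_production: int) -> float:
    """Average number of failures per day since production deployment."""
    if days_in_production <= 0:
        raise ValueError("days_in_production must be positive")
    return failures / days_in_production


# Illustrative numbers, not real project data.
print(f"{high_priority_bug_ratio(12, 80):.0%}")           # 15%
print(f"{average_failure_rate(6, 30):.2f} failures/day")  # 0.20 failures/day
```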

Usability

Measuring user experience involves representative users, so usability testing is usually carried out with the help of focus groups. Usability metrics are defined on a case-by-case basis, but they generally consist of tasks that users are asked to complete within a limited time.
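One common way to turn such timed tasks into a number is a task success rate: the share of participants who finish within the time limit. The sketch below assumes completion times are recorded in seconds and that `None` marks a participant who gave up. Both conventions and the sample data are illustrative.

```python
from typing import Optional, Sequence


def task_success_rate(times_sec: Sequence[Optional[float]], limit_sec: float) -> float:
    """Share of participants who finished the task within the time limit.

    A value of None means the participant did not finish the task.
    """
    if not times_sec:
        raise ValueError("at least one participant is required")
    successes = sum(1 for t in times_sec if t is not None and t <= limit_sec)
    return successes / len(times_sec)


# Illustrative data: seconds each participant needed to complete a checkout.
times = [41.0, 58.5, None, 95.0, 33.2]
print(task_success_rate(times, limit_sec=60.0))  # 0.6
```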

Performance Efficiency

In CISQ terms, “efficient” is equivalent to “scalable”. But there’s more to it than well-written code: if your web servers can’t cope, your excellent code will be of little value. As a result, scalability can differ between parts of your app: some elements may scale while the app as a whole does not. You measure efficiency with the following tests:

- Stress testing. It’s similar to load testing. The difference is that stress testing reveals the point where the system can no longer operate, not the point where performance starts to decrease.

- Soak testing. That’s your system’s endurance, so to speak: the load is applied for a long time to see when the system starts to fail. It answers the question of when rather than where.

You can learn more about the performance testing metrics in our dedicated article.
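The difference between the point of performance decrease and the point of failure can be sketched with a few measurements. The example below is only an illustration: the `Sample` fields, the sample numbers, and both thresholds (500 ms response time, 90% error rate) are assumptions, and real tools report far richer data.

```python
from typing import Callable, NamedTuple, Optional, Sequence


class Sample(NamedTuple):
    users: int          # concurrent users during the test run
    response_ms: float  # average response time in milliseconds
    error_rate: float   # share of failed requests, 0.0 to 1.0


def first_level(samples: Sequence[Sample],
                predicate: Callable[[Sample], bool]) -> Optional[int]:
    """Return the lowest user count whose sample satisfies the predicate."""
    for sample in sorted(samples, key=lambda s: s.users):
        if predicate(sample):
            return sample.users
    return None  # the condition was never reached in these runs


# Illustrative measurements, not real data.
runs = [
    Sample(100, 120.0, 0.00),
    Sample(500, 180.0, 0.00),
    Sample(1000, 950.0, 0.02),
    Sample(2000, 4000.0, 0.97),
]

# Point where performance starts to decrease (assumed 500 ms threshold).
degradation_point = first_level(runs, lambda s: s.response_ms > 500.0)
# Point where the system effectively stops operating (assumed 90% errors).
breaking_point = first_level(runs, lambda s: s.error_rate > 0.90)
print(degradation_point, breaking_point)  # 1000 2000
```

A soak test would use the same kind of check, but over elapsed time at a constant load instead of over increasing user counts.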

Maintainability

The choices you make when you start developing the app, such as the technology stack and programming languages, directly affect its maintainability. Maintainability suffers when you have a large portion of legacy code or poorly written code in general, and that affects your ability to hand over the app’s maintenance from one development team to another. Here is how you measure your app’s maintainability:

- Code size. This is a rather simple approach: the more lines of code you have, the more effort is required to maintain them.

- Code writing best practices. Checking your code against industry standards helps determine how well it is written according to well-recognized benchmarks. If you are developing an industry-specific app, you can use a domain standard (for instance, CERT or MISRA for an automotive solution).
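The code size metric from the list above can be approximated with a simple line counter. This is a rough sketch: it assumes a single full-line comment prefix (`#` by default) and ignores block comments and docstrings, which dedicated tools handle properly.

```python
def count_code_lines(source: str, comment_prefix: str = "#") -> int:
    """Count lines that are neither blank nor full-line comments."""
    count = 0
    for line in source.splitlines():
        stripped = line.strip()
        if stripped and not stripped.startswith(comment_prefix):
            count += 1
    return count


# Illustrative source text with one comment and two blank lines.
sample = """
# configuration
TIMEOUT = 30

def ping():
    return "pong"
"""
print(count_code_lines(sample))  # 3
```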

Rate of delivery

By modern-day standards, delivery frequency largely depends on how widely DevOps is adopted at your organization. A CI/CD pipeline helps you ship product updates with fewer bugs, which is something you can’t afford to miss out on. Overall, you measure the rate of delivery by how frequently your updates are shipped to users. Pure and simple.
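Delivery frequency can be derived from a list of release dates. The sketch below assumes a per-week unit and counts the intervals between the first and last release. The function name and the sample dates are illustrative.

```python
from datetime import date
from typing import Sequence


def deployments_per_week(release_dates: Sequence[date]) -> float:
    """Average releases per week, based on intervals between releases."""
    if len(release_dates) < 2:
        raise ValueError("need at least two releases to compute a frequency")
    span_days = (max(release_dates) - min(release_dates)).days
    if span_days == 0:
        raise ValueError("releases must span more than one day")
    intervals = len(release_dates) - 1
    return intervals / (span_days / 7)


# Illustrative release history: one release every Monday for four weeks.
releases = [date(2024, 1, 1), date(2024, 1, 8), date(2024, 1, 15),
            date(2024, 1, 22), date(2024, 1, 29)]
print(deployments_per_week(releases))  # 1.0
```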

How Do You Know You’re Making Good Software?

The answer is pretty straightforward: you need proper quality assurance and quality control in place. What’s the difference between the two, you ask?

Well, quality assurance (QA) is a set of activities aimed at ensuring that quality requirements are met. That includes procedures, standards, governance, and the like.

Quality control (QC), on the other hand, has more to do with inspection activities. It verifies that the abovementioned requirements are met, using a set of specific inspection techniques and approaches.

The important thing to note here is that both are part of quality management, and you need both to build products that meet certain standards and market expectations.

So Who Is Responsible for Software Quality?

The software development team is the one that produces code. It’s the QA department’s job to catch bugs, sure. But the software development team is the one responsible for fixing the bugs. All in all, software quality is a shared responsibility.

Read more: Who Owns Quality in a Scrum Team and How QA Fits With Agile

A good idea is to cultivate a culture of code ownership at your company. At Facebook, developers are held responsible for the code they produce, assistance with testing, and production deployment.

Our suggestion: don’t single out specific individuals and make them whipping boys. That doesn’t make for a great team spirit. Consider instead a shared responsibility for product quality.